Remember I said it was time for some experiments?

Well, they started.

TestingSaaS goes green IT, just like its logo.

It started with a spark that grew into a flame, and last Wednesday a flow started to spread.

How did this happen?

The spark that lit the flame: a book called Green IT

A few months ago I was walking through my favorite bookshop in Oss like Ernest Hemingway did a hundred years ago in the famous bookshop Shakespeare and Company in Paris. I always relax here, just browsing through books. Then I saw a book called Green IT by Jan Hoogstra and Eric Consten.

While reading the cover I got intrigued, so I bought it. Yeah, current IT (especially AI) is having a problematic impact on the climate. And next to some theory, this book also gives practical examples of how to decrease this impact. Software, hardware, networks, data centers and utilities can all help do it. Companies and organisations like TNO, AFAS and the government are doing it.

This got me thinking: how could I help with my company?

Not only by making my company more sustainable, but also by becoming a real player in this new field.

A spark became a flame.

Then I met Wilco Burggraaf on LinkedIn.

The flow: Meeting the people from Sustainable IT Netherlands and the Green Software Foundation

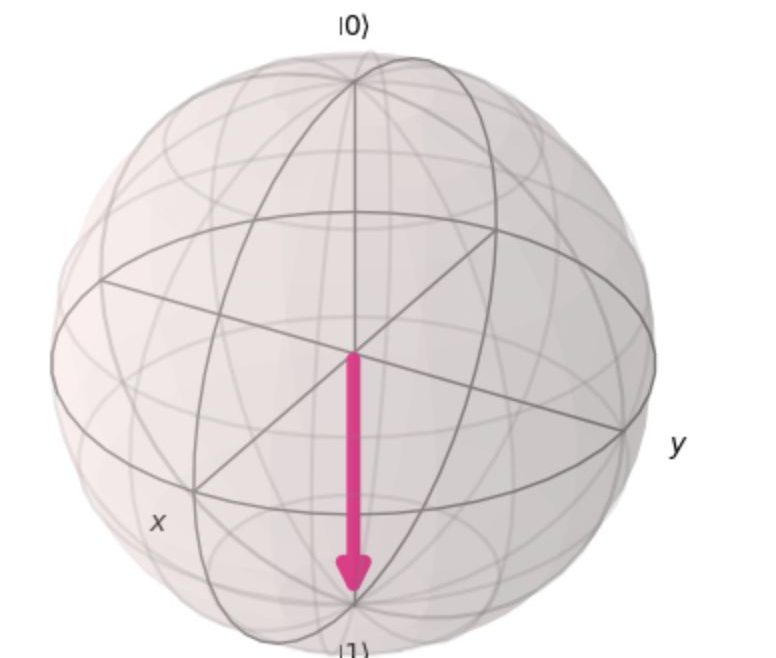

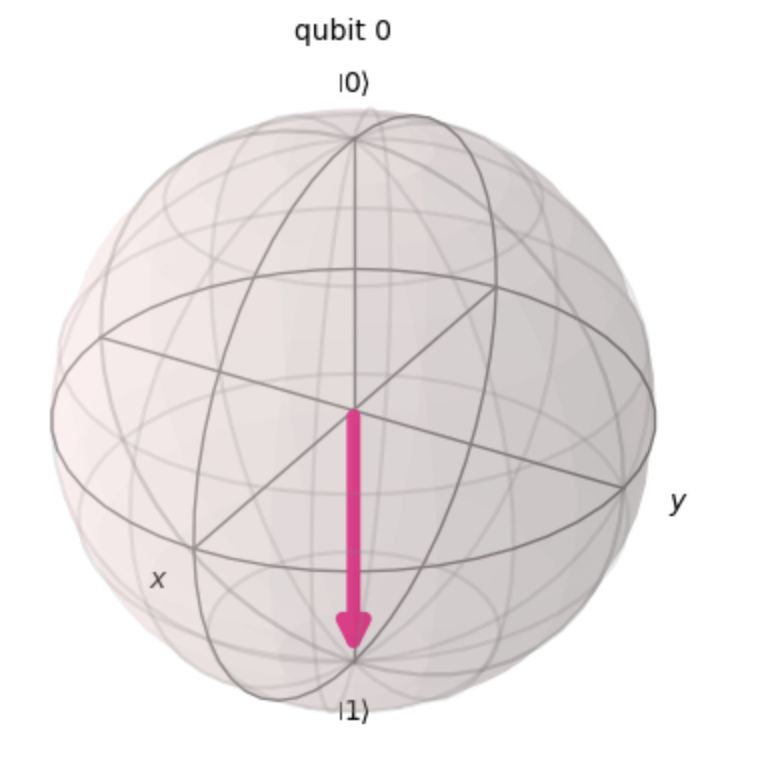

Following Wilco, the Dutch Green Software Champion, expanded my network in Green IT with people like Robert Keus, a social entrepreneur revolutionizing the way technology intersects with society. He is also developing a first green AI chat, which reduces the impact AI has on our environment by running on sustainable infrastructure and by repurposing heat. Chatbots, eh, where did I do that before?

Man, I had to meet these people, but how? As an entrepreneur I’m also quite busy.

Let’s see if there are meetups where they are involved, and yes, there are.

One of them was last Wednesday, the 28th of May 2025. A meetup of the Green Software Foundation and Sustainable IT Netherlands communities, where passion for technology converges with a shared commitment to sustainability.

Hosted by Thorsten Picard at Capgemini HQ Utrecht.

This was the time to get that flow going!

The Green IT flow

The evening was wonderful. I finally met Wilco and Robert and a lot of other people, a real organic gathering.

I heard about the ‘Green Software Foundation’, and I was very happy to also meet people from ‘Sustainable IT Netherlands’. Corina Milosoiu and Chris Stapper, very delighted to have met you.

But a meetup is not a meetup when there are no talks.

Robert kicked it off, together with Cas Burggraaf, with an energetic and eye-opening session, which proved that a talk about GenAI can be about so much more than just numbers and figures.

Then the stage was ready for a lighthearted talk by Mirko van der Maat from Capgemini about Sustainability in Architecture and Barbapapa. Oh man, Barbapapa, forgotten memories.

At the end it was time for some pitches, which were received very well.

But hey, what is a meetup without some drinks and snacks, very well facilitated by our host?

Time for some networking. Great talks with very passionate people with one thing in common: Green IT!

TestingSaaS going green: the future?

Ok, we had a spark that erupted into a flame, which became a flow.

Well, I want a good Aussie bush fire, I want to create a flood.

Yes, I remember your books, Rijn Vogelaar.

And I can’t do it alone.

With my new friends from the Green Software Foundation and Sustainable IT Netherlands communities I can.

How? By Creating Content through Testing!

To be continued!!